Full-featured Lattice sensAI stack includes modular hardware platforms, neural network IP cores, software tools, reference designs, and custom design services from ecosystem partners.

Flexible inferencing solutions optimized for power consumption from under 1 mW to 1 W, available in package sizes starting at 5.5 mm², and priced from $1 to $10 for high-volume production.

Accelerates deployment of AI into a range of Edge applications including mobile, smart home, smart city, smart factory, and smart car products.

Hardware Platforms

Modular hardware platforms for rapid prototyping of ultra-low power machine learning inferencing designs for Edge applications.

- Video Interface Platform – ECP5 FPGA-based modular development platform featuring the award-winning Embedded Vision Development Kit for AI designs requiring under 1 W of power consumption. Flexible interface connectivity boards support MIPI CSI-2, embedded DisplayPort (eDP), HDMI, GigE Vision, USB 3.0 and more.

- Mobile Development Platform – iCE40 UltraPlus FPGA-based platform for AI designs requiring only a few mW of power consumption. It offers a variety of on-board image sensors, microphones, compass/pressure/gyro sensors and more.

Lattice sensAI IP Cores

Neural network accelerator IP cores include support for convolution, pooling and fully connected network layers.

- Convolutional Neural Network (CNN) Accelerator – Fully parameterizable IP core optimized for ECP5 FPGA implementation, with support for variable quantization to trade accuracy against power consumption.

- Binarized Neural Network (BNN) Accelerator – Optimized for the iCE40 UltraPlus FPGA, the accelerator supports the implementation of AI designs that consume only a few mW of power.
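
To make the accuracy versus power tradeoff concrete, the following Python sketch shows the general ideas behind variable quantization and binarized weights. It is an illustration only, not the Lattice IP cores or the Neural Network Compiler: the function names (`quantize`, `binarize`, `binary_dot`, `mean_abs_error`) and the uniform symmetric quantization scheme are assumptions chosen for clarity.

```python
# Illustrative sketch only: these helpers are hypothetical and are not part of
# the Lattice sensAI IP cores or tools.

def quantize(weights, bits):
    """Uniform symmetric quantization of float weights to signed `bits`-bit levels."""
    if bits < 2:
        raise ValueError("use binarize() for 1-bit weights")
    max_abs = max(abs(w) for w in weights) or 1.0
    levels = 2 ** (bits - 1) - 1           # for example, 127 levels per sign at 8 bits
    scale = max_abs / levels
    return [round(w / scale) * scale for w in weights]

def binarize(weights):
    """Map each weight to +1 or -1 by sign (zero maps to +1)."""
    return [1 if w >= 0 else -1 for w in weights]

def mean_abs_error(original, approx):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(original, approx)) / len(original)

def binary_dot(a, b):
    """Dot product of two +1/-1 vectors using XNOR and a bit count.

    With +1 packed as bit 1 and -1 as bit 0, matching bits contribute +1
    and differing bits contribute -1, so the result is 2 * matches - n.
    """
    n = len(a)
    pa = sum(1 << i for i, v in enumerate(a) if v == 1)
    pb = sum(1 << i for i, v in enumerate(b) if v == 1)
    mask = (1 << n) - 1
    matches = bin(~(pa ^ pb) & mask).count("1")
    return 2 * matches - n

if __name__ == "__main__":
    weights = [0.9, -0.45, 0.1, -0.8]
    for bits in (8, 4, 2):
        err = mean_abs_error(weights, quantize(weights, bits))
        print(f"{bits}-bit mean abs error: {err:.4f}")
    print("binarized:", binarize(weights))
```

Fewer bits per weight mean less storage and simpler arithmetic at the cost of larger approximation error. In the fully binarized case, multiply-accumulate operations reduce to XNOR and bit counting, which is one reason such networks suit very small, low-power devices.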

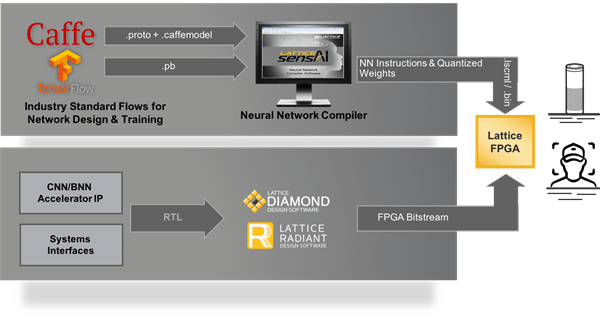

Software Tools

In addition to FPGA design software, such as Lattice Diamond and Lattice Radiant, the Lattice sensAI stack includes the new Neural Network Compiler tool for deployment of networks developed in Caffe or TensorFlow into Lattice FPGAs – no prior RTL experience required.

- Neural Network Compiler – Rapidly analyze, simulate, and compile various networks for implementation onto Lattice CNN/BNN Accelerator IP cores.

Reference Designs and Demos

The Lattice sensAI stack includes reference designs and demos that use the hardware platforms, IP cores, and software tools to implement common AI functionality.

Custom Design Services

The Lattice sensAI stack includes an ecosystem of select, worldwide design service partners that can deliver custom solutions for a range of end applications, including mobile, smart home, smart city, smart factory, and smart car products.